pylops.TorchOperator

- class pylops.TorchOperator(*args, **kwargs)

Wrap a PyLops operator into a Torch function.

This class can be used to wrap a PyLops operator into a Torch function. By doing so, users can mix native Torch functions (e.g., basic linear algebra operations, neural networks) and PyLops operators.

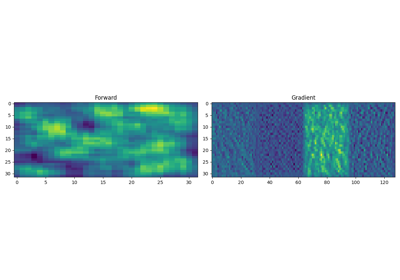

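Since all operators in PyLops are linear operators, a Torch function is simply implemented by using the forward operator for its forward pass and the adjoint operator for its backward (gradient) pass. This works because the Jacobian of \(\mathbf{y}=\mathbf{A}\mathbf{x}\) with respect to \(\mathbf{x}\) is \(\mathbf{A}\) itself, so the gradient propagated back to \(\mathbf{x}\) is the adjoint \(\mathbf{A}^H\) applied to the gradient with respect to \(\mathbf{y}\).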
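A minimal sketch of this idea using `torch.autograd.Function`, assuming a dense NumPy matrix `A` as a stand-in for a PyLops operator (the class name `MatrixTorchFunction` is hypothetical and not part of PyLops). The forward pass leaves Torch, applies \(\mathbf{A}\) in NumPy and converts the result back; the backward pass applies \(\mathbf{A}^H\) to the incoming gradient.

```python
import numpy as np
import torch


class MatrixTorchFunction(torch.autograd.Function):
    """Hypothetical sketch: forward applies A, backward applies A^H.

    A dense NumPy matrix stands in for a PyLops operator; this is an
    illustration, not the actual TorchOperator implementation.
    """

    @staticmethod
    def forward(ctx, x, A):
        # Keep the (NumPy) operator for the backward pass
        ctx.A = A
        # Leave Torch, apply the forward operator in NumPy, come back
        y = A @ x.detach().cpu().numpy()
        return torch.from_numpy(y).to(x.device)

    @staticmethod
    def backward(ctx, grad_y):
        # Adjoint (conjugate transpose) applied to the incoming gradient
        A = ctx.A
        grad_x = A.conj().T @ grad_y.detach().cpu().numpy()
        # One gradient per forward input: x gets grad_x, A gets None
        return torch.from_numpy(grad_x).to(grad_y.device), None


# Usage: gradient of ||A x||^2 should equal 2 A^T A x
rng = np.random.default_rng(0)
A = rng.standard_normal((4, 3))
x = torch.randn(3, dtype=torch.float64, requires_grad=True)
y = MatrixTorchFunction.apply(x, A)
loss = (y ** 2).sum()
loss.backward()
expected = 2 * A.T @ (A @ x.detach().numpy())
print(np.allclose(x.grad.numpy(), expected))  # True
```

Because the operator is linear, nothing from the forward input needs to be saved for the backward pass; only the operator itself is kept on `ctx`.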

- Parameters

  - Op : pylops.LinearOperator
    PyLops operator.
  - batch : bool, optional
    Input has a single sample (`False`) or a batch of samples (`True`). If `batch==False`, the input must be a 1-d Torch tensor; if `batch==True`, the input must be a 2-d Torch tensor with batches along the first dimension.
  - device : str, optional
    Device to be used when applying the operator (`cpu` or `gpu`).
  - devicetorch : str, optional
    Device to which the output of the operator is assigned (any Torch-compatible device).
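To picture the `batch=True` layout described above: each row of the 2-d input is one sample, so applying the operator row by row gives the same result as applying it once to the transposed batch (samples as columns) and transposing back. The sketch below uses a dense NumPy matrix as a hypothetical stand-in for a PyLops operator; it illustrates the data layout only and does not claim to show how `TorchOperator` vectorizes the batch internally.

```python
import numpy as np
import torch

rng = np.random.default_rng(1)
A = rng.standard_normal((4, 3))              # stand-in operator: 3 inputs -> 4 outputs
X = torch.randn(5, 3, dtype=torch.float64)   # batch of 5 samples, one per row

# Row by row: apply the operator to each sample independently
Y_loop = torch.stack([torch.from_numpy(A @ xi.numpy()) for xi in X])

# Same result in one call: operate on the columns of X^T, then transpose back
Y_batch = torch.from_numpy((A @ X.numpy().T).T)

print(Y_batch.shape)                    # torch.Size([5, 4])
print(torch.allclose(Y_loop, Y_batch))  # True
```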

Methods

- `__init__(Op[, batch, device, devicetorch])`: Initialize this LinearOperator.
- `adjoint()`: Hermitian adjoint.
- `apply(x)`: Apply forward pass to input vector.
- `apply_columns(cols)`: Apply subset of columns of operator.
- `cond([uselobpcg])`: Condition number of linear operator.
- `conj()`: Complex conjugate operator.
- `div(y[, niter, densesolver])`: Solve the linear problem \(\mathbf{y}=\mathbf{A}\mathbf{x}\).
- `dot(x)`: Matrix-matrix or matrix-vector multiplication.
- `eigs([neigs, symmetric, niter, uselobpcg])`: Most significant eigenvalues of linear operator.
- `matmat(X)`: Matrix-matrix multiplication.
- `matvec(x)`: Matrix-vector multiplication.
- `reset_count()`: Reset counters.
- `rmatmat(X)`: Adjoint matrix-matrix multiplication.
- `rmatvec(x)`: Adjoint matrix-vector multiplication.
- `todense([backend])`: Return dense matrix.
- `toimag([forw, adj])`: Imaginary-part operator.
- `toreal([forw, adj])`: Real-part operator.
- `tosparse()`: Return sparse matrix.
- `trace([neval, method, backend])`: Trace of linear operator.
- `transpose()`: Transpose this linear operator.
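Since the backward pass is only correct if `rmatvec` is the exact adjoint of `matvec`, it is worth checking this pair with a dot test, which verifies \(\mathbf{v}^H(\mathbf{A}\mathbf{u}) = (\mathbf{A}^H\mathbf{v})^H\mathbf{u}\) for random \(\mathbf{u}\), \(\mathbf{v}\). PyLops provides its own `pylops.utils.dottest`; the standalone `dot_test` helper below is a hypothetical, real-valued sketch of the same idea, with plain NumPy functions standing in for an operator's `matvec` and `rmatvec`.

```python
import numpy as np


def dot_test(matvec, rmatvec, nr, nc, rtol=1e-10, seed=0):
    """Hypothetical helper: check that rmatvec is the adjoint of matvec.

    Real-valued case: for random u, v, <v, A u> must equal <A^T v, u>.
    """
    rng = np.random.default_rng(seed)
    u = rng.standard_normal(nc)
    v = rng.standard_normal(nr)
    lhs = np.dot(v, matvec(u))   # <v, A u>
    rhs = np.dot(rmatvec(v), u)  # <A^T v, u>
    return abs(lhs - rhs) <= rtol * max(abs(lhs), abs(rhs), 1.0)


A = np.random.default_rng(2).standard_normal((4, 3))
ok = dot_test(lambda x: A @ x, lambda y: A.T @ y, 4, 3)
# Deliberately wrong adjoint (scaled by 2) to show the test catching it
bad = dot_test(lambda x: A @ x, lambda y: 2 * A.T @ y, 4, 3)
print(ok, bad)
```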